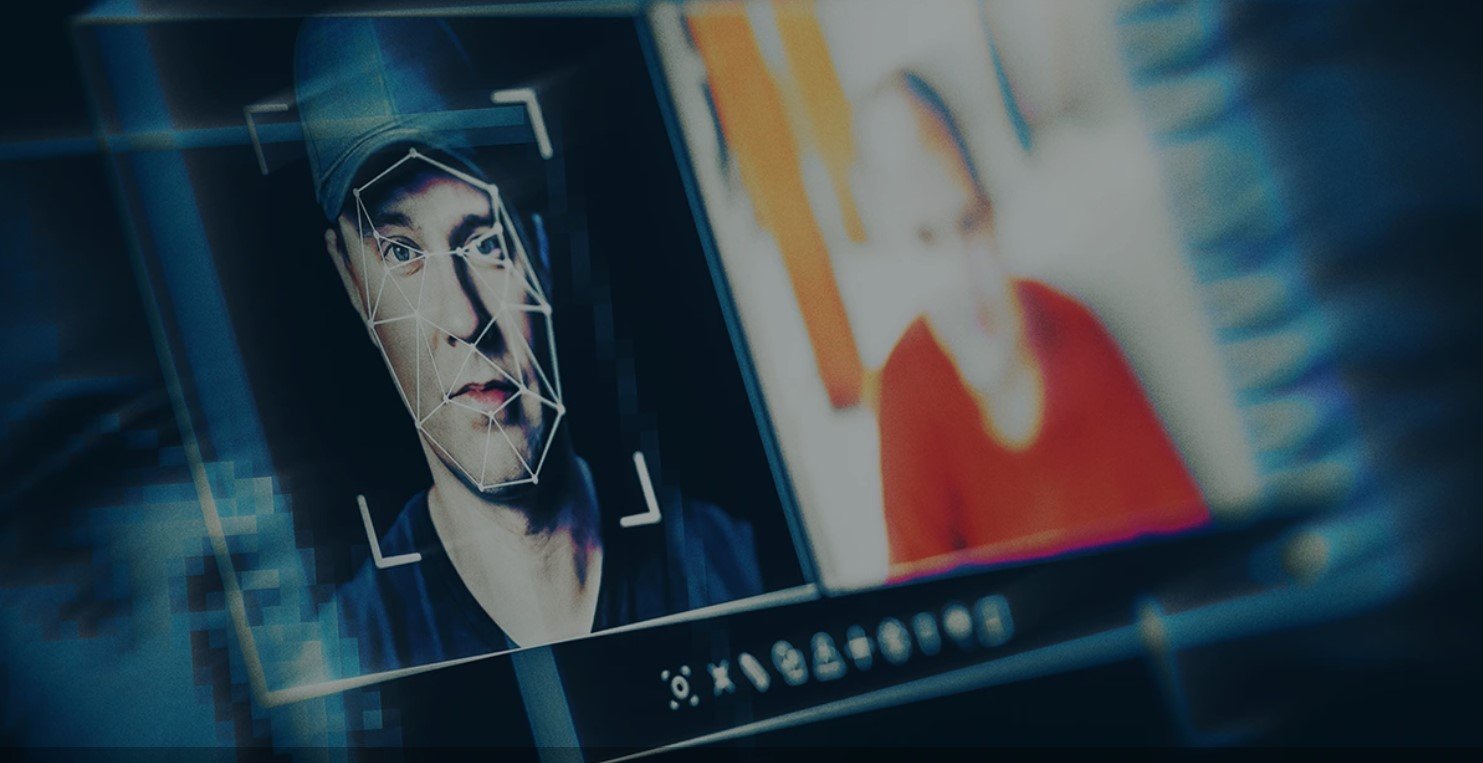

Deepfakes are poised to be the biggest AI-related threat because of the vast potential for misuse.

Criminals have yet to plumb their full potential, and we predict they will use deepfakes in new scams and criminal schemes in 2025. Popular or common social engineering scams will become even more believable with the use of deepfakes, while an LLM trained on a person’s public posts can mimic their writing style, knowledge, and personality.

These AI-enabled techniques produce dangerously convincing impersonations aimed at unwitting victims. We also predict that AI-based semi-automated scams will continue. For corporations, BEC and “fake employee” scams should be the most concerning. Bypass-KYC-as-a-service has been popular in the underground for a few years already, sustained by three elements: unintentionally exposed biometrics, leaked and breached PII (particularly from ransomware attacks), and the growing capabilities of AI. Scammers will continue to use this avenue of attack.

Trend Micro, an eminent cybersecurity leader, today warned that highly customized, AI-powered attacks could supercharge scams, phishing, and influence operations in 2025 and beyond.

Sharda Tickoo, Country Manager for India & SAARC, Trend Micro: “As generative AI makes its way ever deeper into enterprises and the societies they serve, we need to be alert to the threats. Hyper-personalized attacks and agent AI subversion will require industry-wide effort to root out and address. Business leaders should remember that there’s no such thing as standalone cyber risk today. All security risk is ultimately business risk, with the potential to impact future strategy profoundly.”

Trend’s 2025 predictions report warns of the potential for malicious “digital twins,” where breached/leaked personal information (PII) is used to train an LLM to mimic the knowledge, personality, and writing style of a victim/employee. When deployed in combination with deepfake video/audio and compromised biometric data, they could be used to commit convincing identity fraud or to “honeytrap” a friend, colleague, or family member.

Deepfakes and AI could also be leveraged in large-scale, hyper-personalized attacks to:

· Enhance business email compromise and business process compromise (BEC/BPC) and “fake employee” scams at scale.

· Identify pig butchering victims.

· Lure and romance these victims before handing them off to a human operator, who can chat via the “personality filter” of an LLM.

· Improve open-source intelligence gathering by adversaries.

· Develop capabilities during pre-attack preparation to improve attack success.

· Create authentic-seeming social media personas at scale to spread mis/disinformation and scams.

Elsewhere, businesses that adopt AI in greater numbers in 2025 will need to be on the lookout for threats such as:

· Vulnerability exploitation and hijacking of AI agents to manipulate them into performing harmful or unauthorized actions.

· Unintended information leakage from generative AI (GenAI).

· Benign or malicious system resource consumption by AI agents, leading to denial of service.
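One way to limit the resource-consumption risk above is to cap how much work an agent may do per task. The following is a minimal sketch, not something taken from the report: the names `AgentBudget`, `BudgetExceeded`, and `run_agent` are hypothetical, and it assumes each agent step can be wrapped as a Python callable.

```python
import time


class BudgetExceeded(Exception):
    """Raised when an agent run exceeds its step or time budget."""


class AgentBudget:
    """Hypothetical per-task budget for an AI agent (illustrative only)."""

    def __init__(self, max_steps: int, max_seconds: float) -> None:
        self.max_steps = max_steps
        self.max_seconds = max_seconds
        self.steps = 0
        self.started = time.monotonic()

    def charge(self) -> None:
        """Record one step and fail closed once a limit is exceeded."""
        self.steps += 1
        if self.steps > self.max_steps:
            raise BudgetExceeded(f"step limit of {self.max_steps} exceeded")
        if time.monotonic() - self.started > self.max_seconds:
            raise BudgetExceeded(f"time limit of {self.max_seconds}s exceeded")


def run_agent(actions, budget):
    """Run callables in order, charging the budget before each one."""
    results = []
    for action in actions:
        budget.charge()
        results.append(action())
    return results
```

A run that stays within its limits returns normally, while a runaway loop, whether benign or induced by an attacker, is stopped at the first step past the cap instead of exhausting shared resources.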

Outside the world of AI threats

The report highlights additional areas for concern in 2025, including:

Vulnerabilities

· Memory management and memory corruption bugs, vulnerability chains, and exploits targeting APIs

· More container escapes

· Older, simpler vulnerabilities like cross-site scripting (XSS) and SQL injections

· The potential for a single vulnerability in a widely adopted system to ripple across multiple models and manufacturers, such as a connected vehicle ECU
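The older vulnerability classes in this list have well-known mitigations. As a minimal illustration, assuming a Python application with an SQLite database (the `users` table and the function names are hypothetical), parameterized queries keep user input out of the SQL text, and output escaping keeps it from being interpreted as HTML:

```python
import html
import sqlite3


def find_user(conn: sqlite3.Connection, username: str):
    """Look up a user with a parameterized query.

    The ? placeholder passes the value as data, so it is never parsed as SQL.
    """
    cur = conn.execute("SELECT id, name FROM users WHERE name = ?", (username,))
    return cur.fetchall()


def render_comment(comment: str) -> str:
    """Escape user text before placing it in HTML element content."""
    return "<p>" + html.escape(comment) + "</p>"


# Hypothetical in-memory database for demonstration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO users (name) VALUES ('alice')")
```

With this setup, a classic injection string such as `' OR '1'='1` is treated as an ordinary (non-matching) user name, and a `<script>` tag in a comment is rendered as text rather than executed.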

Ransomware

Threat actors will respond to advances in endpoint detection and response (EDR) tooling by:

· Creating kill chains that use locations where most EDR tools aren’t installed (e.g., cloud systems or mobile, edge, and IoT devices)

· Disabling AV and EDR altogether

· Using bring your own vulnerable driver (BYOVD) techniques.

· Hiding shellcode inside inconspicuous loaders

· Redirecting Windows subsystem execution to compromise EDR/AV detection.

The result will be faster attacks with fewer steps in the kill chain that are harder to detect.

Time for action

In response to these escalating threats and an expanding corporate attack surface, Trend recommends:

· Implementing a risk-based approach to cybersecurity, enabling centralized identification of diverse assets and effective risk assessment and prioritization.

· Harnessing AI to assist with threat intelligence, asset profile management, attack path prediction, and remediation guidance—ideally from a single platform.

· Updating user training and awareness in line with recent AI advances and how they enable cybercrime.

· Monitoring and securing AI technology against abuse, including validating inputs, responses, and any actions generated by AI

· For LLM security: hardening sandbox environments, implementing strict data validation, and deploying multi-layered defenses against prompt injection

· Understanding the organization’s position within the supply chain, addressing vulnerabilities in public-facing servers, and implementing multi-layered defenses within internal networks

· Facilitating end-to-end visibility into AI agents

· Implementing attack path prediction to mitigate cloud threats
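The recommendations on validating AI-generated actions and applying strict data validation can be sketched in code. The example below is an assumption-laden illustration rather than Trend's method: it supposes the model proposes actions as JSON, and the allowlist `ALLOWED_ACTIONS` and the function `validate_action` are hypothetical names. Anything that is not an explicitly permitted action with exactly the expected arguments is rejected before it can run.

```python
import json

# Hypothetical allowlist: action name -> required argument names and types.
ALLOWED_ACTIONS = {
    "lookup_order": {"order_id": int},
    "send_reply": {"text": str},
}


def validate_action(raw: str) -> dict:
    """Parse model output and reject anything outside the allowlist."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError("model output is not valid JSON") from exc
    if not isinstance(data, dict):
        raise ValueError("model output must be a JSON object")

    name = data.get("action")
    if not isinstance(name, str) or name not in ALLOWED_ACTIONS:
        raise ValueError("action is not on the allowlist")

    schema = ALLOWED_ACTIONS[name]
    args = data.get("args")
    if not isinstance(args, dict) or set(args) != set(schema):
        raise ValueError("unexpected or missing arguments")
    for key, expected in schema.items():
        # bool is a subclass of int; no field here expects a bool, so reject it.
        if isinstance(args[key], bool) or not isinstance(args[key], expected):
            raise ValueError(f"argument {key!r} has the wrong type")

    return {"action": name, "args": args}
```

Validation of this kind is only one layer. It does not detect prompt injection itself, but it narrows what an injected instruction can make the agent do.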